Stable Diffusion

I am trying to fine-tune the Stable Diffusion model using LoRA training. My goal is to tune it on custom faces in order to build a virtual agent that impersonates a real person, which means I will also need to develop several automation pipelines for posting stories, posts, and so on. But first, we need to train the model.

Limitation

People generally post close-up images, so a model trained on them may make mistakes when it generates full-body images.

Step 1: Dataset preparation

I use ig-follower, a tool I developed myself, to download all the images from Instagram posts.

Useful links

| URL | Remark |
|---|---|
| birme.net | Web page for resizing and cropping images |

- Prepare at least 15 images that cover different lighting, directions, and environments.

- To improve training, I strongly recommend the following:

  - Pick images with simple backgrounds and with nothing covering the face, including fingers, hands, and so on. (Glasses are fine, but then a certain number of the images must contain them.)

  - If your dataset has more than ~80 images, you can include some images with the background removed. Keep the subject in its original colors and give each of these images a different background color; using the same color for all of them may cause overfitting.

  - Segment Anything, a research model from Meta AI, can be used to remove the background of an image.

- Crop and resize the images to a square (768x768, 512x512, etc.). You can use birme.net to help. The resolution affects both the training result and the hardware requirements.
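
If you prefer to do the crop-and-resize step locally instead of on birme.net, it can be sketched roughly as follows. This is only a minimal sketch, assuming Pillow is installed; the function names `center_crop_box` and `crop_and_resize` are hypothetical helpers, not part of any tool mentioned in this post. It always takes the centered square, which can cut off a face that sits near the edge, so check the output images by eye.

```python
def center_crop_box(width: int, height: int) -> tuple[int, int, int, int]:
    """Return the (left, upper, right, lower) box of the largest centered square."""
    side = min(width, height)
    left = (width - side) // 2
    upper = (height - side) // 2
    return (left, upper, left + side, upper + side)


def crop_and_resize(image_path: str, out_path: str, size: int = 512) -> None:
    """Center-crop an image to a square and resize it to size x size pixels."""
    # Imported here so the box helper above also works without Pillow installed.
    from PIL import Image

    with Image.open(image_path) as img:
        box = center_crop_box(*img.size)
        square = img.crop(box).resize((size, size), Image.LANCZOS)
        # Convert to RGB so the result can be saved as JPEG as well as PNG.
        square.convert("RGB").save(out_path)
```

For example, `crop_and_resize("raw/photo1.jpg", "dataset/photo1.png", size=768)` would write a 768x768 image; both paths here are placeholders.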

Random background color Python function

```python
import random
from pathlib import Path

from PIL import Image

# Candidate background colors (RGB). These are example values; extend as needed.
background_colors = [(255, 255, 255), (0, 0, 0), (200, 200, 200), (135, 206, 235)]


def png_add_random_background(image_path: str, out_dir: str):
    path = Path(image_path)
    # Validate before opening: the input must be an existing .png file.
    if not path.is_file() or path.suffix.lower() != ".png":
        print("This is not a PNG file")
        return

    # Convert to RGBA so the last channel is always the alpha mask.
    img = Image.open(path).convert("RGBA")
    background_color = random.choice(background_colors)

    # Square canvas whose side is the longer edge of the image.
    side = max(img.size)
    bg = Image.new("RGB", (side, side), background_color)
    # Paste at the top-left corner, using the alpha channel as the mask.
    bg.paste(img, mask=img.split()[-1])

    print(path.stem)
    bg.save(Path(out_dir) / f"{path.stem}.png", "PNG")
```

Step 2: Training Config

The following information is based on using Colab to set up the training environment. Stable Diffusion is no longer allowed on the free tier of Colab.

In this step, I will use the kohya-LoRA-dreambooth.ipynb notebook.

The latest version of kohya-LoRA-dreambooth.ipynb can be found here.

Ref: Stable Diffusion Art

Reminders for training

- chilloutmix_NiPrunedFp32Fix: this model is generally more effective for Asian faces.

- (Optional) To improve the model accuracy, we can edit the prompt for each image. We use the Waifu Diffusion Tagger to generate the prompts, but sometimes a prompt is wrong or is missing important keywords such as "glasses" or "full body".

- In the LoRA and Optimizer Config, if you have ~15 images you can choose small values for `network_dim` and `network_alpha`; the model size will be around 16MB. However, if you have more than 50 images, choose larger values for these parameters.

- For `num_epochs`, at least 100 may be needed, based on some discussions on the Internet.

- If you have a large dataset, use an A100 or a more powerful GPU. Time is money! In my experience: T4 (~7 hours) vs. A100 (~1 hour).
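
To get a feeling for how `num_epochs` and the dataset size translate into training time, it can help to estimate the number of training steps before starting a run. The sketch below is only a rough approximation: `num_repeats` and `batch_size` are assumed settings that are not discussed in this post, and the trainer may count steps differently (for example with gradient accumulation or resolution bucketing).

```python
import math


def estimate_total_steps(num_images: int, num_repeats: int,
                         num_epochs: int, batch_size: int = 1) -> int:
    """Estimate training steps as batches per epoch times the number of epochs."""
    steps_per_epoch = math.ceil(num_images * num_repeats / batch_size)
    return steps_per_epoch * num_epochs


# Hypothetical example: 15 images, 1 repeat per image, 100 epochs, batch size 2.
print(estimate_total_steps(15, 1, 100, batch_size=2))
```

A larger dataset with the same number of epochs scales the step count linearly, which is one reason the GPU choice matters more as the dataset grows.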

Details of the training settings can be found in my Colab notebook: here
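
The optional prompt clean-up from the reminders above can also be scripted. The following is a minimal sketch, assuming the tagger writes one comma-separated caption file per image (for example `photo1.txt` next to `photo1.png`); that file layout and the helper name `add_tags_to_caption` are assumptions, so check how your notebook stores captions before using it.

```python
from pathlib import Path


def add_tags_to_caption(caption_path: str, extra_tags: list[str]) -> str:
    """Append any tags missing from a comma-separated caption file.

    Returns the updated caption text.
    """
    path = Path(caption_path)
    text = path.read_text(encoding="utf-8")
    # Split on commas and drop empty entries and surrounding whitespace.
    tags = [tag.strip() for tag in text.split(",") if tag.strip()]
    for tag in extra_tags:
        if tag not in tags:
            tags.append(tag)
    updated = ", ".join(tags)
    path.write_text(updated, encoding="utf-8")
    return updated
```

Call it only for the images that actually need the keyword, for example `add_tags_to_caption("dataset/photo1.txt", ["glasses"])` for a photo in which the person wears glasses.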

Video Tutorial

YouTube: guideline (Cantonese)

YouTube: latest update

Result of Training

Put the fine-tuned model in stable-diffusion-webui/models/Lora/
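
If you train often, copying the file by hand gets tedious. A small helper like the one below can do it; this is just a sketch, and `install_lora` as well as the example paths are hypothetical. It assumes the web UI folder layout named above.

```python
import shutil
from pathlib import Path


def install_lora(lora_file: str, webui_root: str) -> Path:
    """Copy a trained LoRA file into <webui_root>/models/Lora/ and return the new path."""
    source = Path(lora_file)
    if not source.is_file():
        raise FileNotFoundError(f"LoRA file not found: {source}")
    target_dir = Path(webui_root) / "models" / "Lora"
    # Create the folder if it does not exist yet.
    target_dir.mkdir(parents=True, exist_ok=True)
    return Path(shutil.copy2(source, target_dir / source.name))
```

For example, `install_lora("output/my_lora.safetensors", "stable-diffusion-webui")` would copy the file into `stable-diffusion-webui/models/Lora/`.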

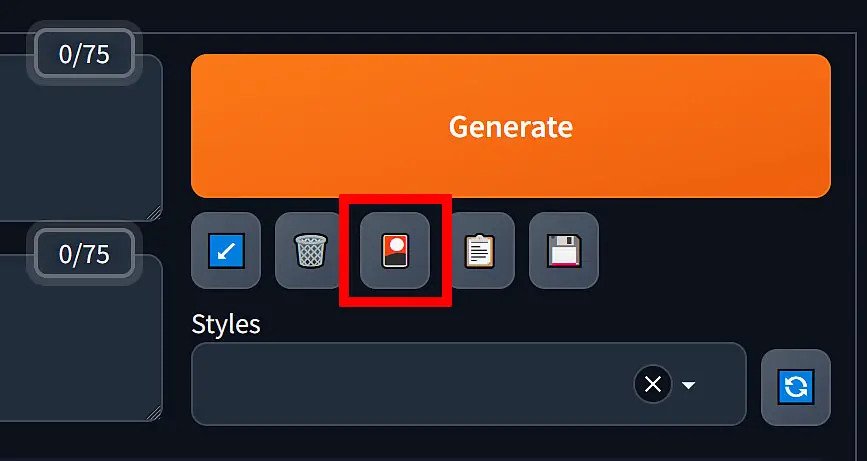

You will find the LoRA at

The following are the configuration details of the generated image.

Positive Prompt

<lora:model_t1dataset_v1-000225:1>, (((t1model))), ((1girl)) portrait photo of 8k ultra realistic, lens flare, atmosphere, glow, detailed, intricate, full of colour, cinematic lighting, trending on artstation, hyperrealistic, focused, extreme details, cinematic, masterpiece,

Negative Prompt

(nsfw), paintings, sketches, (worst quality:2), (low quality:2), (normal quality:2), lowres, normal quality, ((monochrome)), ((grayscale)), skin spots, acnes, skin blemishes, age spot, glans, extra fingers, fewer fingers (watermark:1.2) white letters:1/1

Config

Steps: 60, Sampler: DPM++ 2M Karras, CFG scale: 7, Size: 768x768

Result